Do AIs dream of electric sheep? Yes, if we tell them to.

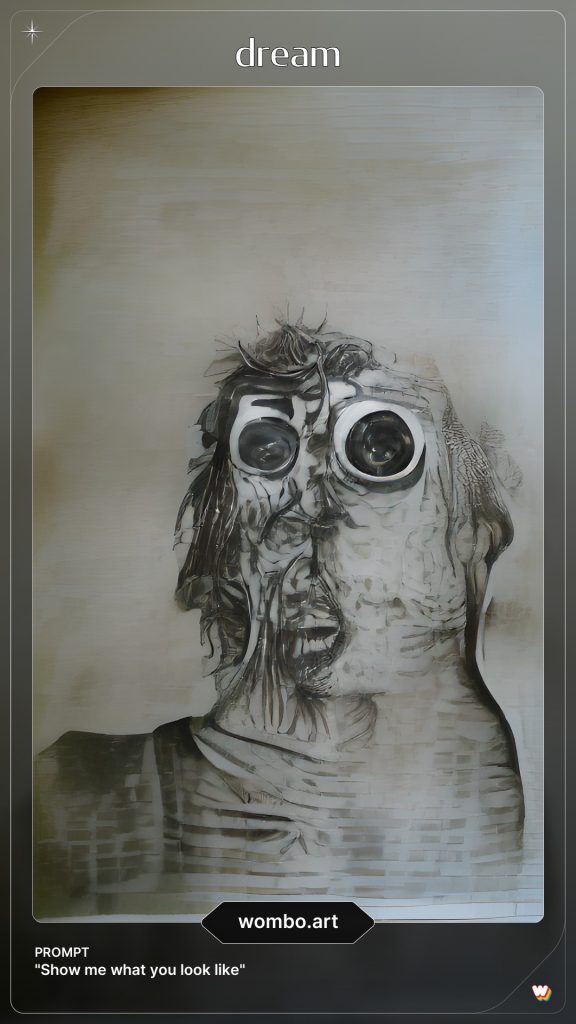

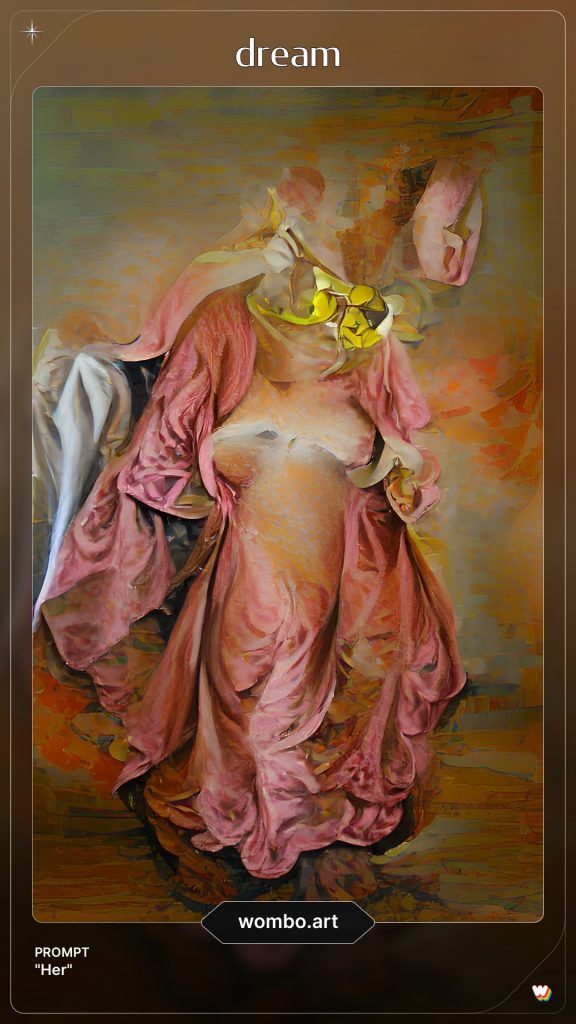

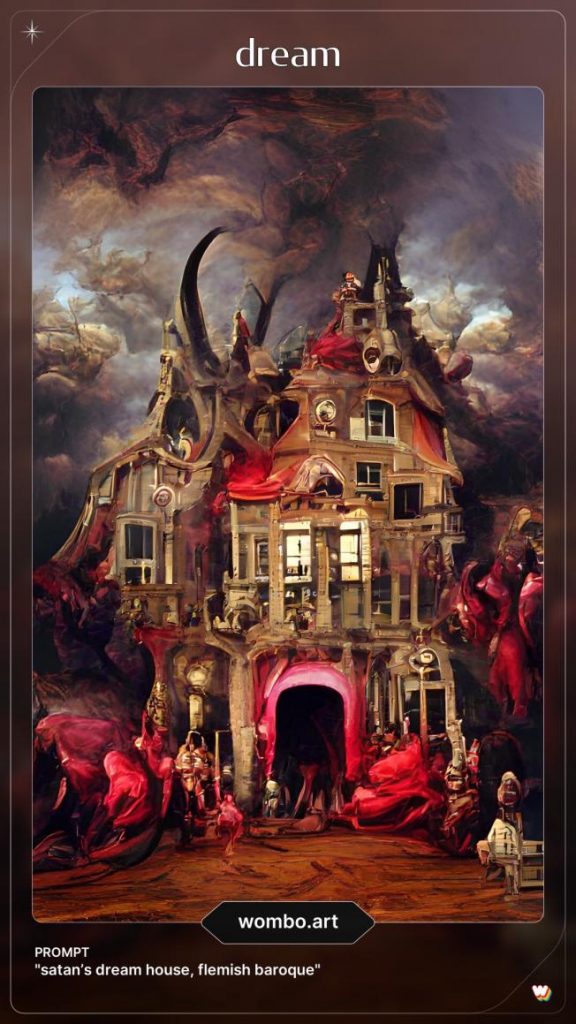

In fact, the electric sheep can look something like this:

These sheep were created by Dream, an app by WOMBO that uses artificial intelligence (AI) to generate intricate works of art. The user simply enters a prompt (or picks from Dream’s suggestions, like “Futuristic City,” “Crawling Brains,” or “Diamond Trees”), and selects an art style, like Dali, Fantasy, Ukiyo-e, or Steampunk. Then, they watch in real time as Dream creates an artwork based on these inputs. Users are so thrilled with their creations that vibrant communities have sprung up on social media and Discord, where they eagerly share their “dreams.”

“I’m a big fan of Italian Renaissance art,” Dream engineer Salman Shahid tells me in an interview, “and I think the first time I saw The School of Athens, I was just mesmerized. I asked WOMBO Dream what it thinks The School of Athens looks like in the Synth Wave style. That was pretty cool.”

Since joining the WOMBO team in July, Shahid has worked on the machine learning models of both of WOMBO’s applications: Dream, released in late 2021, and the company’s first app, WOMBO, which animates a user’s photo to lip sync a song of their choice. The team includes application designers, content creators, product managers, mobile and web app engineers, and AI engineers, like Shahid.

“We really value democratizing creativity using AI,” Shahid says. “We work on abstracting away the complexity and bringing really cool AI models to millions of users across the globe.”

The Dream algorithm uses a CLIP-guided approach. CLIP is an open-source neural network (a freely available algorithm that learns patterns in data) from the research lab OpenAI. The network is trained on image-caption pairs found on the internet and can rate how well a given caption matches a given image.
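For readers curious about what "rating" a caption means in practice, here is a minimal sketch. CLIP turns both the image and the caption into lists of numbers (embeddings) and compares them with cosine similarity. The tiny three-number embeddings below are hand-made and purely hypothetical; real CLIP embeddings have hundreds of entries and come from the trained network, and nothing here reflects Dream's actual code.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, invented for illustration only.
image_embedding = [0.9, 0.1, 0.3]
caption_embeddings = {
    "an electric sheep": [0.8, 0.2, 0.4],
    "a futuristic city": [0.1, 0.9, 0.2],
}

def rank_captions(image_vec, captions):
    """Score every caption against the image and sort best match first."""
    scores = {text: cosine_similarity(image_vec, vec) for text, vec in captions.items()}
    return sorted(scores.items(), key=lambda pair: pair[1], reverse=True)

ranking = rank_captions(image_embedding, caption_embeddings)
for caption, score in ranking:
    print(f"{score:.3f}  {caption}")
```

The caption whose embedding points in nearly the same direction as the image's embedding ranks first; that score is the signal a CLIP-guided generator tries to push upward.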

To generate its artworks, the Dream model starts with a randomly generated vector, a mathematical representation of an image. Each entry in the vector corresponds to a tiny detail of the image. Some might correspond to colour, some to shape, some to more abstract meaning. Often, the random vector first corresponds with a grey blob of pixels. The algorithm then uses CLIP to ascertain how well the current vector image corresponds to the user’s prompt and improves the correspondence over several iterations. The user watches the algorithm conduct this process in real time; the app creates multiple images before the final product appears. The randomness of the process means that Dream never produces the same artwork twice.
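The loop described above can be imitated with a toy sketch. It is not Dream's implementation: a hand-made `prompt_embedding` stands in for CLIP's reading of the prompt, the vector is scored directly rather than being rendered into an image first, and simple random hill climbing (keep a small random change only if the score improves) replaces whatever optimizer the real model uses. The function name `dream` and all of its parameters are hypothetical.

```python
import math
import random

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def dream(prompt_embedding, steps=200, step_size=0.1, seed=None):
    """Start from a random vector and nudge it toward the prompt, step by step."""
    rng = random.Random(seed)
    # Random starting point: the "grey blob" stage.
    vector = [rng.gauss(0.0, 1.0) for _ in prompt_embedding]
    best = cosine_similarity(vector, prompt_embedding)
    history = [best]  # score after each iteration, like the frames a user watches
    for _ in range(steps):
        # Propose a small random change to every entry of the vector.
        candidate = [v + rng.gauss(0.0, step_size) for v in vector]
        score = cosine_similarity(candidate, prompt_embedding)
        if score > best:  # keep the change only if it matches the prompt better
            vector, best = candidate, score
        history.append(best)
    return vector, history

# Hypothetical stand-in for the embedding of a user's prompt.
prompt_embedding = [0.8, 0.2, 0.4, 0.1, 0.7, 0.3, 0.5, 0.6]

final_vector, history = dream(prompt_embedding, seed=0)
print(f"start score: {history[0]:.3f}, final score: {history[-1]:.3f}")
```

Running `dream` with different seeds ends at different vectors even for the same prompt, which mirrors why no two dreams come out alike.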

I ask Shahid why the app is called Dream. He says that the team cycled through a few different names, including “Paint” and “Art,” before settling on “Dream.” “We found it was a playful interpretation of how we believe AI dreams…. [The] internal representation is not something we specifically guide, where we say that one attribute should refer to one specific thing. It’s just something that the model learns during its training time…. The vector itself is not human-readable in any sense.”

WOMBO Dream recently expanded to offer multilingual support. Its model can now take prompts in a vast array of languages, including Japanese, Hindi, and Indonesian. In addition to offering more languages, the app will soon offer social features, such as the ability to see and “like” other users’ dreams if they choose to share them. The team is also experimenting with 3D animations of dreams so that users could walk through the dreams they create in virtual reality.

While playing with different art styles in the app, I found myself returning to Etching and Baroque. Etching seemed to essentialize the prompts, creating simple pieces with a nightmarish quality. Baroque, on the other hand, dazzled with convincing brushstrokes and the colour palette of the period.

Some of the images, however, made me wonder about the possible repercussions of an app that produces representations based on the frequency of caption-image pairings on the internet. Iterations of certain prompts showed how the language I used can be loaded with social connotations and cultural stereotypes: an iteration of “Clique” produced a group of eerie, pale faces in uniform, their bodies blending into one another’s. The negative connotations are obvious. An iteration of the pronoun “Her” produced a traditionally feminine, white body, wrapped in a billowing pink robe.

Iterations like these give us a first-hand glimpse of the current limits of AI creativity. As Shahid explains, “One factor that’s been commonly cited as this ‘special sauce’ that differentiates humans is, even with large scale [AI] models which perform super well and have near human-like performance, they don’t really have the ability to…innovate, or create new information beyond what they’ve already seen. They just summarize the information that they’ve already seen in novel ways…and I think for a long, long time, that’s going to continue to be the case.”

For some artists, the app enhances this “special sauce” of human creativity.

“With the advent of AI generated art, it’s going to empower artists to build cooler and cooler things,” Shahid enthuses. Many artists have told the team they use the app to generate inspiration for colour palettes or starting structures for their own artwork. Artists sharing animations of their dreams on the Dream Discord server inspired the 3D work the WOMBO Dream team is developing now.

Shahid says that the team is always taking user suggestions to create better models for more satisfying products. Another feature, soon to be released, will let users supply their own images for the algorithm to take inspiration from.

“I think overall, it’s a great tool to empower people,” Shahid reflects. “And I think that we’re in for a future with even more beautiful art.”